|

Yanhong Zeng 曾艳红

Yanhong Zeng is a Research Scientist & Engineer at Ant Group, specializing in efficient generative systems. Previously at Shanghai AI Lab, she served as the Lead Core Maintainer of MMagic. Her work bridges the gap between research and production, developing high-quality, controllable, and scalable multi-modal models, with a current focus on world models and streaming video generation.

💗 Hiring: looking for self-motivated interns to work on Generative AI!

Email / Google Scholar / Twitter / GitHub / LinkedIn |

|

News

|

Selected Publications |

|

Reward Forcing: Efficient Streaming Video Generation with Rewarded Distribution Matching Distillation

Yunhong Lu, Yanhong Zeng†, Haobo Li, Hao Ouyang, Qiuyu Wang, Ka Leong Cheng, Jiapeng Zhu, Hengyuan Cao, Zhipeng Zhang, Xing Zhu, Yujun Shen, Min Zhang arXiv, 2025 project page / arXiv / code

Reward Forcing is a new real-time streaming video generation framework with a novel memory design and a rewarded distribution matching distillation method for better dynamic generation. |

|

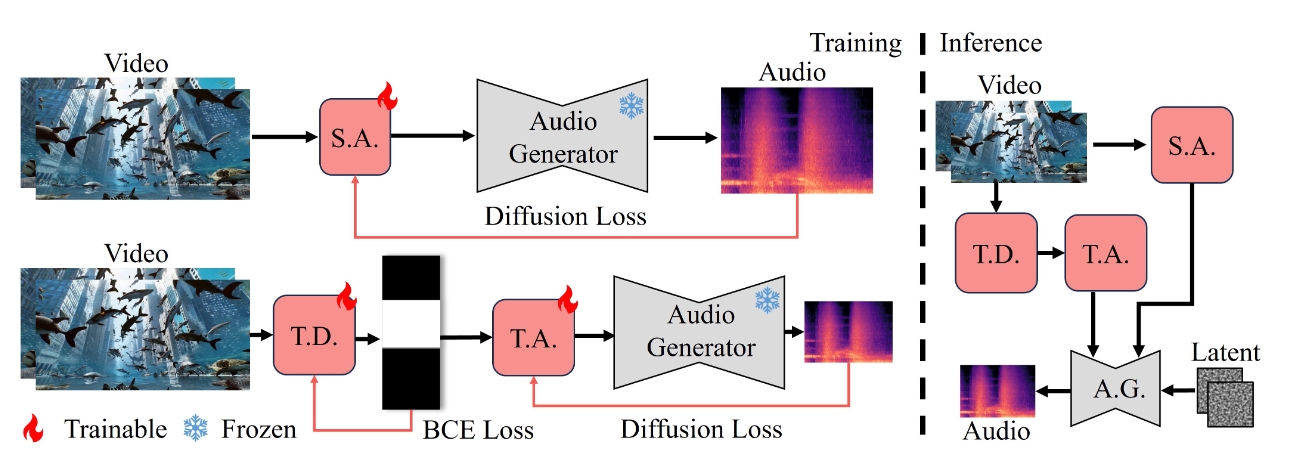

FoleyCrafter: Bring Silent Videos to Life with Lifelike and Synchronized Sounds

Yiming Zhang, Yicheng Gu, Yanhong Zeng†, Zhening Xing, Yuancheng Wang, Zhizheng Wu, Kai Chen IJCV, 2025 project page / video / arXiv / demo / code

FoleyCrafter is a text-based video-to-audio generation framework that generates high-quality audio that is semantically relevant to and temporally synchronized with the input video. |

|

Live2Diff: Live Stream Translation via Uni-directional Attention in Video Diffusion Models

Zhening Xing, Gereon Fox, Yanhong Zeng†, Xingang Pan†, Mohamed Elgharib, Christian Theobalt, Kai Chen arXiv, 2024 project page / video / arXiv / demo / code

Live2Diff is the first attempt to bring uni-directional attention modeling to video diffusion models for live video stream processing, and it achieves 16 FPS on an RTX 4090 GPU. |

|

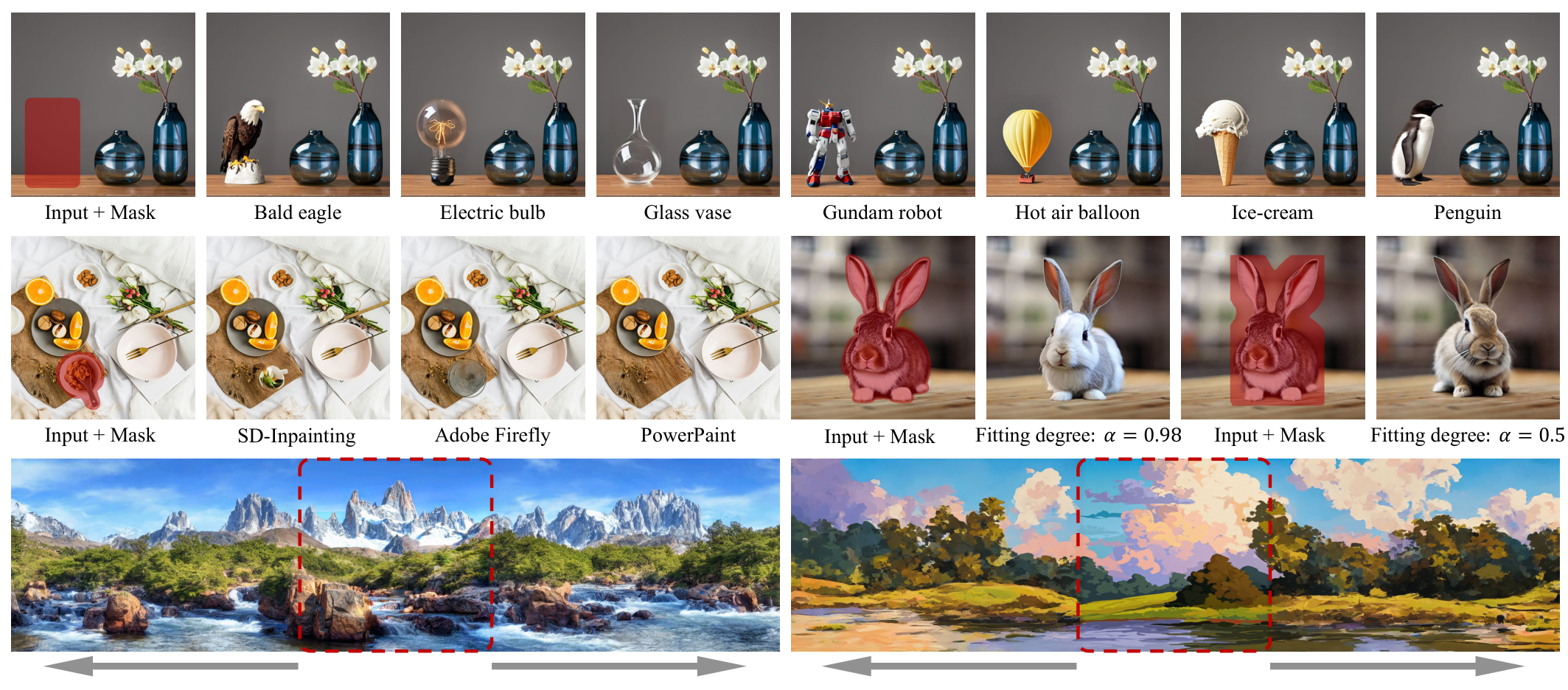

A Task is Worth One Word: Learning with Task Prompts for High-Quality Versatile Image Inpainting

Junhao Zhuang, Yanhong Zeng†, Wenran Liu, Chun Yuan, Kai Chen ECCV, 2024 project page / video / arXiv / demo / code

PowerPaint is the first versatile inpainting model that achieves SOTA in text-guided and shape-guided object inpainting, object removal, outpainting, etc. |

|

PIA: Your Personalized Image Animator via Plug-and-Play Modules in Text-to-Image Models

Yiming Zhang*, Zhening Xing*, Yanhong Zeng†, Youqing Fang, Kai Chen CVPR, 2024 project page / video / arXiv / demo / code

PIA can animate any image from personalized models using text, while preserving high-fidelity details and unique styles. |

|

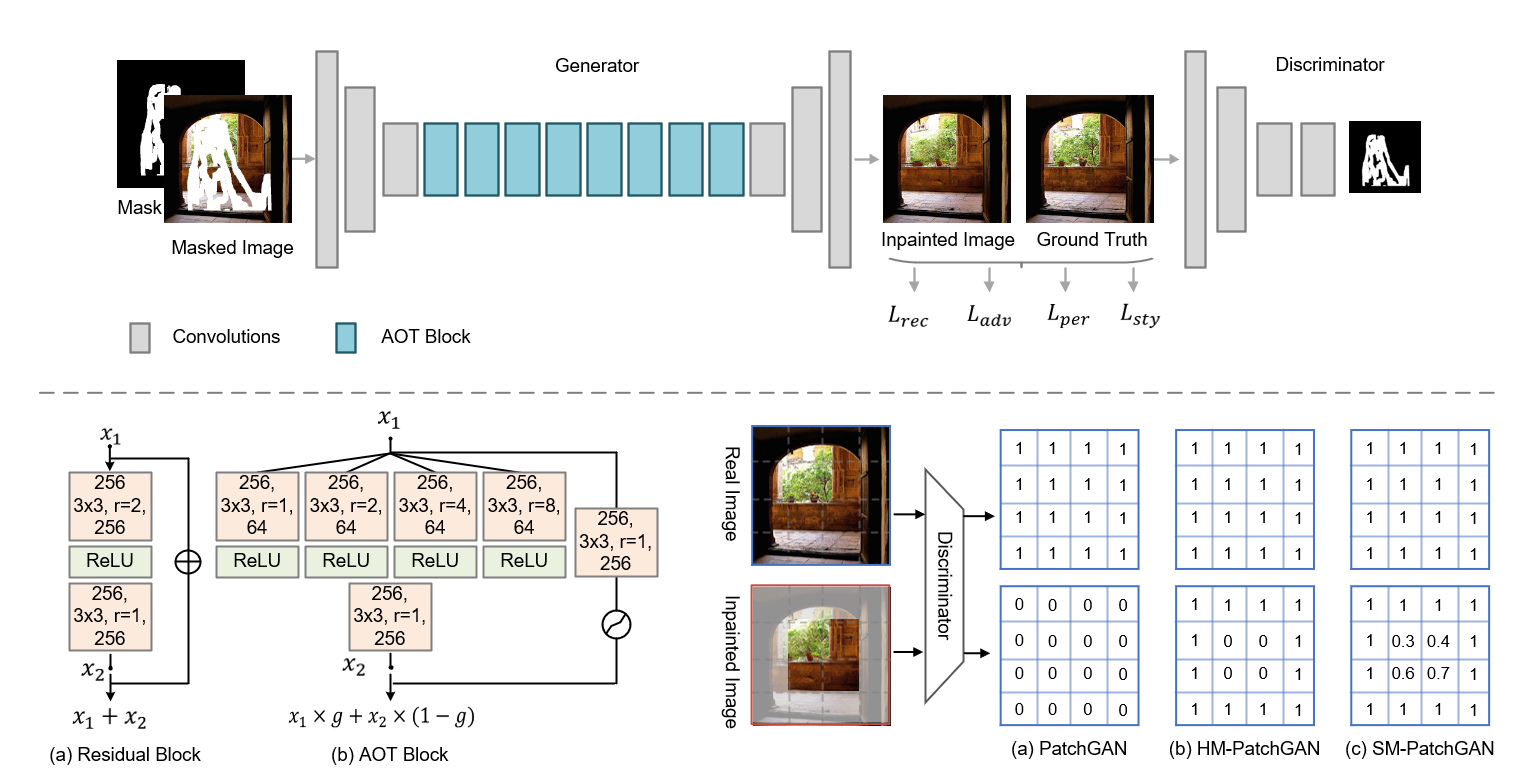

Aggregated Contextual Transformations for High-Resolution Image Inpainting

Yanhong Zeng, Jianlong Fu, Hongyang Chao, Baining Guo TVCG, 2023 project page / arXiv / video 1 / video 2 / code

In AOT-GAN, we propose aggregated contextual transformations and a novel mask-guided GAN training strategy for high-resolution image inpainting. |

|

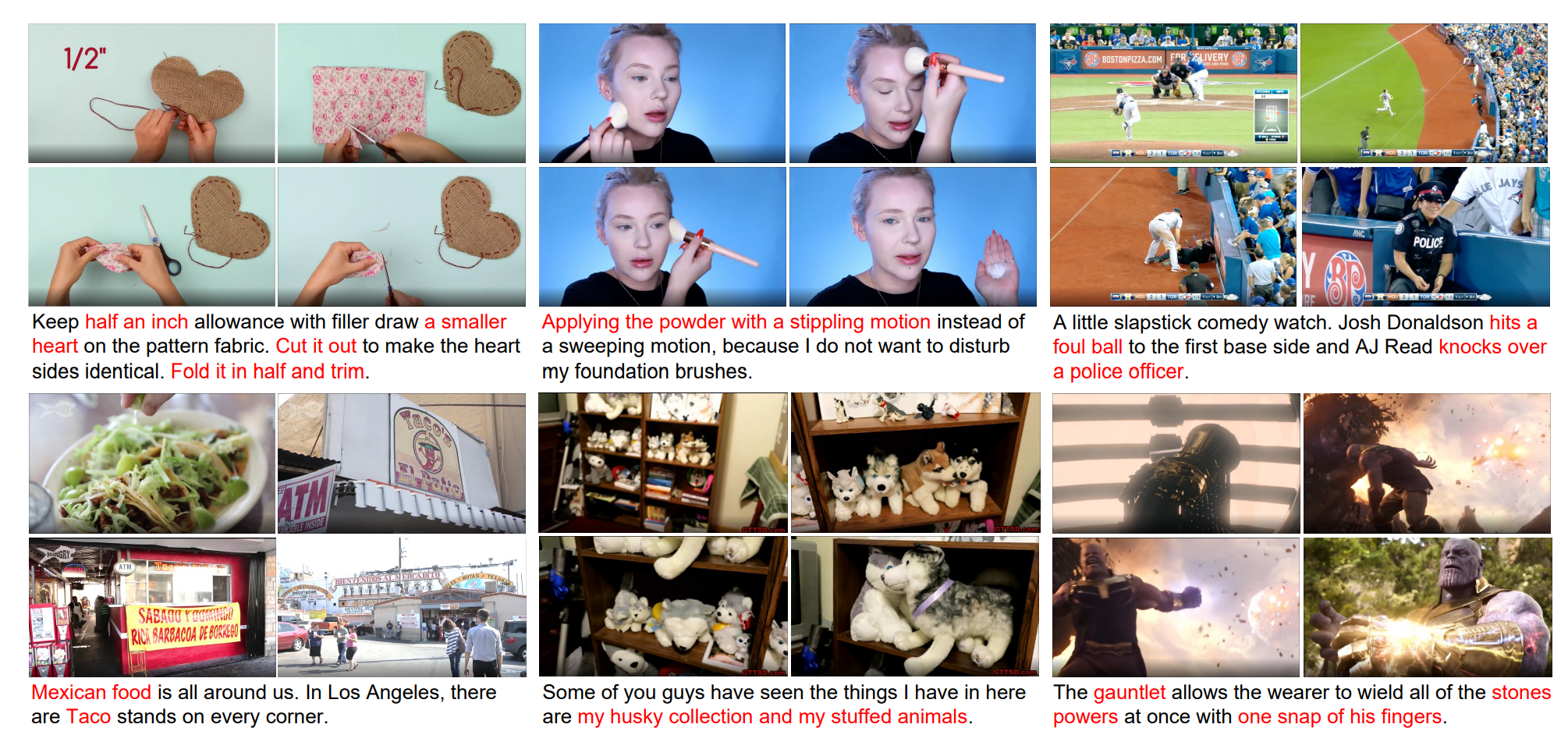

Yanhong Zeng*, Hongwei Xue*, Tiankai Hang*, Yuchong Sun*, Bei Liu, Huan Yang, Jianlong Fu, Baining Guo CVPR, 2022 arXiv / video / code

We collect a large dataset that is both the first high-resolution dataset, with 371.5k hours of 720p videos, and the most diversified, covering 15 popular YouTube categories. |

|

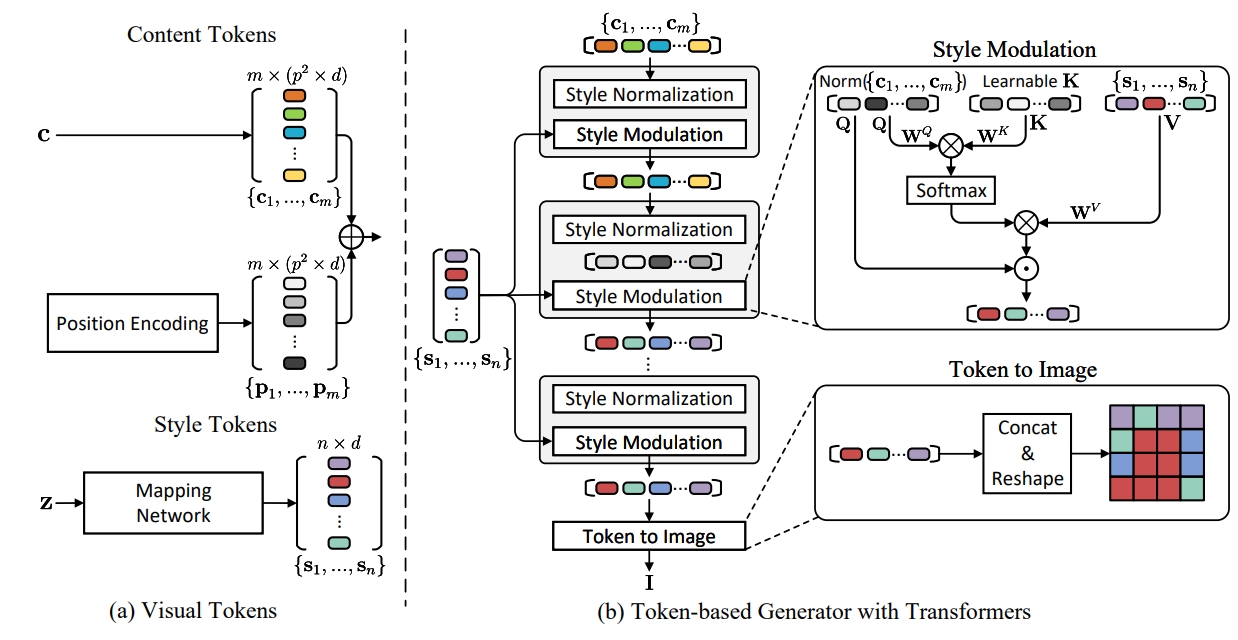

Yanhong Zeng, Huan Yang, Hongyang Chao, Jianbo Wang, Jianlong Fu NeurIPS, 2021 arXiv

We propose a token-based generator with Transformers for image synthesis. We present a new perspective by viewing this task as visual token generation, controlled by style tokens. |

|

Yanhong Zeng, Hongyang Chao, Jianlong Fu ECCV, 2020 project page / arXiv / video 1 / more results / code

We propose STTN, the first transformer-based model for high-quality video inpainting, setting a new state-of-the-art performance. |

|

Yanhong Zeng, Hongyang Chao, Jianlong Fu, Baining Guo CVPR, 2019 project page / arXiv / video / code

We propose PEN-Net, the first work that is able to conduct both semantic and texture inpainting. To achieve this, we propose cross-layer attention transfer and a pyramid filling strategy. |

Working Experience |

|

Ant Group

Researcher, 2025.04 ~ present |

|

Shanghai AI Laboratory

Researcher, 2022.07 ~ 2025.03 |

|

Microsoft Research Asia (MSRA)

Research Intern, 2018.06 ~ 2021.12

Research Intern, 2016.06 ~ 2017.06 |

Projects |

|

CCTV Animation Production: "Poems of Timeless Acclaim"

Tech Lead

Designed and delivered an end-to-end AI animation pipeline for a national-scale production. Achieved global impact with broadcast in 10+ languages across 70+ platforms, amassing 100M+ views. |

|

MagicMaker

Product Owner & Tech Lead

MagicMaker is a user-friendly AI platform that enables seamless image generation, editing, and animation. It empowers users to transform their imagination into captivating cinema and animations with ease. |

|

OpenMMLab/MMagic

Lead Core Maintainer

OpenMMLab Multimodal Advanced, Generative, and Intelligent Creation Toolbox. Unlock the magic 🪄: Generative-AI (AIGC), easy-to-use APIs, awesome model zoo, diffusion models, for text-to-image generation, image/video restoration/enhancement, etc. |

Miscellanea |